In previous articles, I discussed Testability and API Boundaries when using The Composable Architecture (TCA). This week I will dive into performance and a design pattern you might consider: multi-store. This pattern can improve performance and enforce rigid API boundaries.

How are actions processed in TCA?

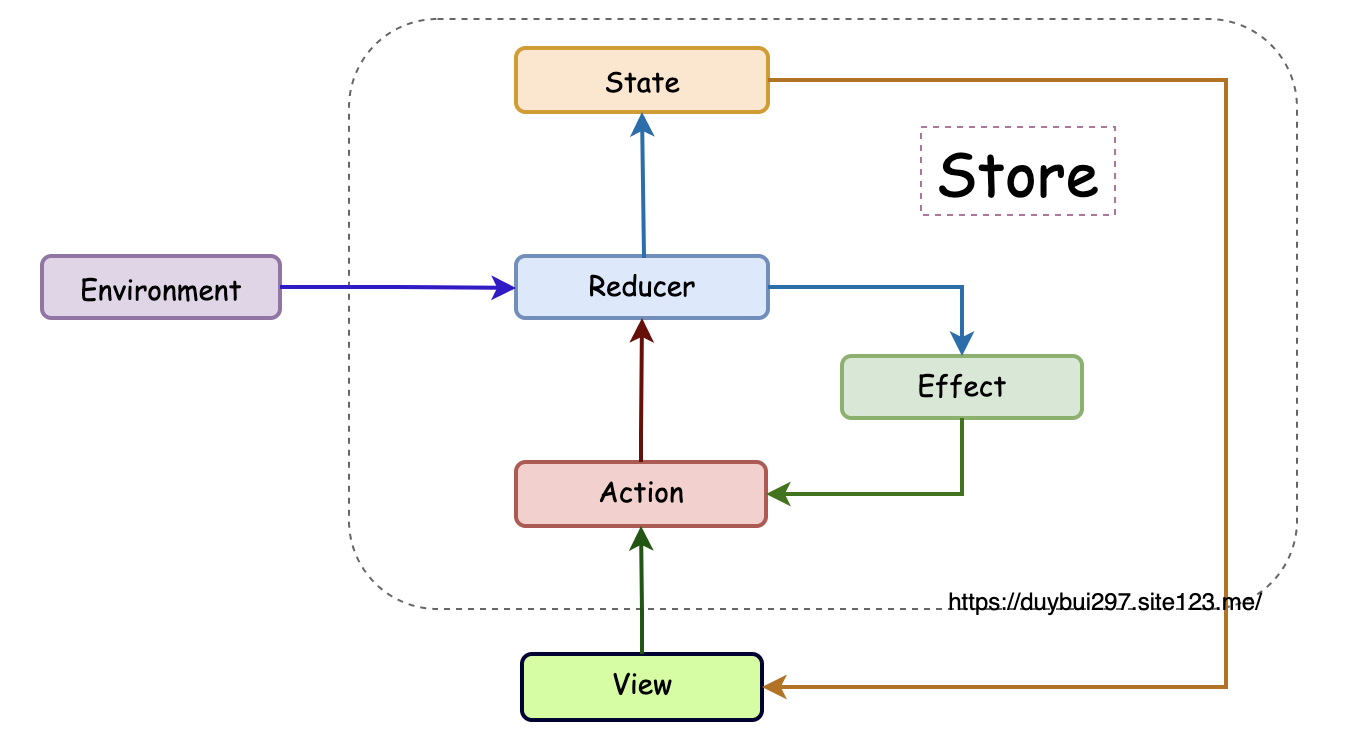

TCA implements a Unidirectional Data Flow (UDF). Using a UDF ensures that events and data in your application move in a consistent and predictable manner. Following this pattern can reduce data inconsistencies because the source of truth is the same throughout the whole application.

UDF in TCA

In TCA, the State managed by the root Store is the source of truth for your application. As different parts of the application need less and less of the overall application state, you use scope functions to whittle the State down into smaller components. One interesting aspect of the State is that it is not required to be Equatable, and TCA does not automatically maintain a dependency graph through the application.

Why is this important?

Whenever we process actions in TCA, the architecture has to assume that the Root State has changed, and call all of our scope functions again and again.

Those scoping functions will be called tens of thousands of times during user sessions, so you need to be careful about what code you put in them. Avoid any calculations in those functions and keep their operations as simple as possible.

Even sending a no-op action into the TCA system will use significant CPU / main thread processing time because of all the scoping calls.
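To make that cost concrete, here is a minimal sketch in plain Swift. It is a toy model, not TCA's actual implementation: `ToyStore`, `AppState`, and `AppAction` are hypothetical names invented for this illustration. The only thing it shares with the description above is the assumption that the store cannot tell what changed, so it re-runs every scope function after every action.

```swift
// Toy model (NOT TCA's real implementation): a store that cannot tell which
// part of its state changed, so it re-runs every registered scope function
// after every action, including actions that change nothing.
struct AppState {
    var counter = 0
    var noteText = ""
}

enum AppAction {
    case increment
    case noOp
}

final class ToyStore {
    private(set) var state = AppState()
    private(set) var scopeCalls = 0
    private var scopes: [(AppState) -> Void] = []

    // Registers a scope function; the closure stands in for a state transformation.
    func scope<Local>(_ toLocal: @escaping (AppState) -> Local) {
        scopes.append({ state in _ = toLocal(state) })
    }

    func send(_ action: AppAction) {
        switch action {
        case .increment: state.counter += 1
        case .noOp: break
        }
        // No Equatable state and no dependency graph: assume everything changed.
        for scope in scopes {
            scope(state)
            scopeCalls += 1
        }
    }
}

let store = ToyStore()
for _ in 0..<50 { store.scope { $0.counter } }  // 50 scoped children
for _ in 0..<100 { store.send(.noOp) }          // 100 actions that change nothing
print(store.scopeCalls) // 5000
```

In this model the work grows with (number of scopes) x (number of actions), which is why any calculation placed inside a scope function is multiplied so quickly.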

Example scenario and some numbers

To give you a sense of the impact that this trait of architecture has in larger applications, let’s look at The Browser Company’s Arc.

We have built a native note-taking feature, Notes, into the browser. In the following example, for consistency when comparing performance, we will type 5 lines of text and record the number of events sent through TCA.

💡

We have custom instrumentation added to TCA on our fork, and we are collaborating with the Point-Free team to upstream it for everyone.

Nonetheless, it’s a great architectural trait to track such detailed information.

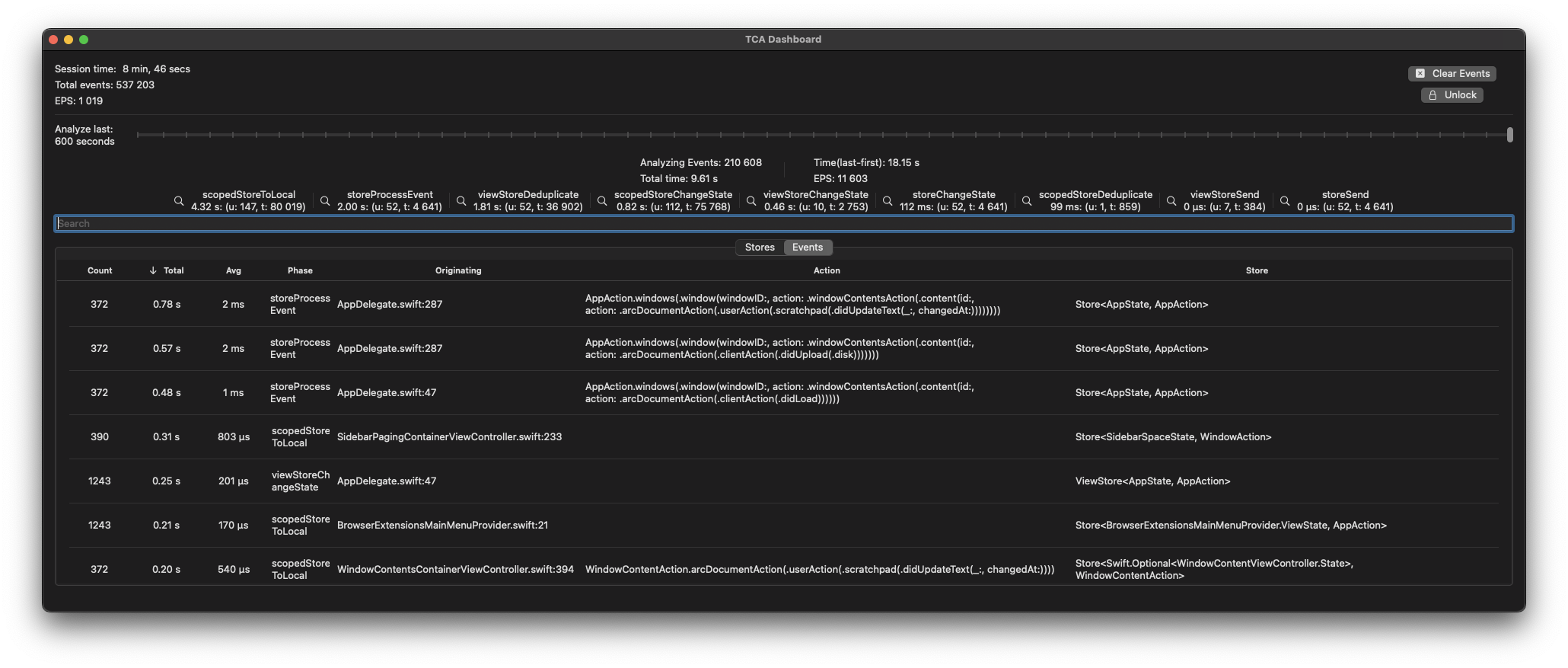

Performance Dashboard I’ve built for the product

Using a single store, we saw 210 000 events being processed and CPU time taking **9.61s**. In comparison, when we disconnected the notes module from the single store, created a separate store for it, and connected only the relevant information, the numbers dropped to 17 284 events processed and a total CPU time of 0.91s.

That’s an order of magnitude difference.
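As a quick check of that claim, the ratios can be computed directly from the numbers quoted above. The variable names are just labels for this sketch.

```swift
// Figures quoted in the article for typing 5 lines of text in Notes.
let singleStoreEvents = 210_000.0
let multiStoreEvents = 17_284.0
let singleStoreCPUSeconds = 9.61
let multiStoreCPUSeconds = 0.91

// Roughly 12x fewer events and roughly 10.5x less CPU time.
let eventRatio = singleStoreEvents / multiStoreEvents
let cpuRatio = singleStoreCPUSeconds / multiStoreCPUSeconds
print(eventRatio, cpuRatio)
```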

ℹ️

Events are not actions as we track multiple events per action, but the numbers here are taken from the same scenarios and, as such, are directly comparable.

Multi-Store Architecture

🤔

If only considering performance, a simple application might not need multi-store. A Vanilla TCA approach with a single store would still be a viable option for most small apps.

When we talk about multi-store architecture, what we mean is that we’d no longer be using direct scoping for our distinct features. Instead, you’d be creating a standalone Store object that would manage its own state.

But how do we connect independent stores together to build an application?

UDF Modules with dependency injection

We will define each feature as a black box with rigid Input / **Output** and connect them using dependency injection. This treatment ensures firm API boundaries, makes the code more explicit and easier to comprehend, and prevents other parts of the app from depending on internal actions.
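One way to picture such a black box is sketched below in plain Swift, without TCA or Combine. `NotesFeature`, `NotesInput`, and `NotesOutput` are hypothetical names for this illustration, and the closure-based output is a simplification of the publisher-based wiring shown later in this article.

```swift
// Hypothetical public surface of a feature module: inputs in, outputs out.
public enum NotesInput {
    case keystroke(Character)
    case save
}

public enum NotesOutput: Equatable {
    case noteSaved(text: String)
}

public final class NotesFeature {
    // Internal state never leaves the module.
    private var text = ""
    private let onOutput: (NotesOutput) -> Void

    public init(onOutput: @escaping (NotesOutput) -> Void) {
        self.onOutput = onOutput
    }

    public func send(_ input: NotesInput) {
        switch input {
        case .keystroke(let character):
            text.append(character)            // high frequency, stays internal
        case .save:
            onOutput(.noteSaved(text: text))  // rare, crosses the boundary
        }
    }
}

// The app only ever sees NotesInput and NotesOutput.
func runNotesDemo() -> [NotesOutput] {
    var outputs: [NotesOutput] = []
    let notes = NotesFeature { outputs.append($0) }
    for character in "Hello" { notes.send(.keystroke(character)) }
    notes.send(.save)
    return outputs
}

print(runNotesDemo().count)
```

Six inputs go in and a single output comes out, so the rest of the application never has to react to individual keystrokes.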

Data modeling

The multi-store pattern will yield performance benefits when a feature and the containing application do not need to share much data, and there are infrequent updates necessary to the root application state.

Arc’s Notes was a good candidate because it produces actions at a high frequency, but only a small number of them affect the entire application.

Even though you are isolating your feature into its own store, you can still use standard TCA approaches in that context, so use whatever scope / pullback configurations you want. The main benefit is that all of this becomes an internal implementation detail of the feature rather than something that leaks into your main app abstraction layer.

App <> Feature Communication

Let’s look at how we can set up a communication pipeline for a multi-store setup.

```swift
struct FeatureState {
    let value: Int
}
```

How do we deliver inputs from the Application to the Feature?

The simplest way to communicate from the Application to the Feature is through the Publisher pipeline.

If we want to forward some data, then at the feature integration level (in the app codebase), we can grab the scoped app viewStore.publisher and pass it as one of the dependencies into FeatureEnvironment.

```swift
import Combine
import ComposableArchitecture

// FeatureModule
// Equatable so the app can wrap the scoped store in a ViewStore.
struct FeatureInputs: Equatable {
    let number: Int
}

struct FeatureEnvironment {
    let inputPublisher: AnyPublisher<FeatureInputs, Never>
}

// an application integration point
let featureEnvironment = FeatureEnvironment(
    inputPublisher: ViewStore(appStore.scope(state: \.featureInputs))
        .publisher
        .eraseToAnyPublisher()
)

let feature = FeatureIntegration
    .create(initial: ..., environment: featureEnvironment)
...
```

Feature vs App integration points

Depending on the kind of feature you are building, you might also be able to pass in a data client and use it to observe the state directly in the FeatureModule itself, rather than passing it through the application.

🤔

Passing App Environment objects into your specific feature integration can be messy in the current main of TCA, since you’d either need to pass them in through Dependency Injection or expose them as some global (ugh).

It gets much nicer with the upcoming ReducerProtocol update.

If we want to forward intents (requests/actions) instead of data, then we’d inject a Publisher into our FeatureEnvironment and inject its underlying source, e.g. a PassthroughSubject, into AppEnvironment. That way, we can send requests from the Application to the Feature, even if they are not related to the data of the app.
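A minimal sketch of that intent pipeline, using only Combine, could look like the following. `FeatureRequest` and the property names are assumptions made for this example (the article does not name them), and the two environment structs are reduced to the single field that matters here.

```swift
import Combine

// Hypothetical intent type; the article does not define one.
enum FeatureRequest: Equatable {
    case focusEditor
    case insert(text: String)
}

// The app side owns the subject, so it is the only side that can send.
struct AppEnvironment {
    let featureRequests: PassthroughSubject<FeatureRequest, Never>
}

// The feature side only sees a read-only publisher.
struct FeatureEnvironment {
    let requests: AnyPublisher<FeatureRequest, Never>
}

func runIntentDemo() -> [FeatureRequest] {
    let subject = PassthroughSubject<FeatureRequest, Never>()
    let appEnvironment = AppEnvironment(featureRequests: subject)
    let featureEnvironment = FeatureEnvironment(requests: subject.eraseToAnyPublisher())

    // Inside the feature: subscribe and turn each request into internal work.
    var received: [FeatureRequest] = []
    let cancellable = featureEnvironment.requests.sink { received.append($0) }
    defer { cancellable.cancel() }

    // Inside the app: send intents that are unrelated to app state.
    appEnvironment.featureRequests.send(.focusEditor)
    appEnvironment.featureRequests.send(.insert(text: "Hello"))
    return received
}

print(runIntentDemo().count) // 2
```

Erasing the subject to `AnyPublisher` before handing it to the feature keeps the direction of the pipe explicit: the feature can listen but cannot send.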

Feature -> Application

To go the other way around, so that the Application can observe Feature updates, we use a Delegate Action pattern, e.g.

```swift
let delegate = PassthroughSubject<FeatureDelegateAction, Never>()

let featureBox = FeatureIntegration
    .create(
        initial: initial,
        environment: .init(..., delegate: delegate)
    )

delegate.sink { delegate in
    appViewStore.send(.featureActionDelegate(delegate))
}
.store(in: &subscriptions)
```

When to use multi-store?

Performance

If you are only concerned about performance, then I’d only choose multi-store if you share very little data between the feature and the application state and you have exhausted other optimization options (e.g., instrumenting and optimizing scoping functions).

Boundaries

If you want to ensure the stronger API Boundaries that I have previously discussed, multi-store forces your team to maintain them because features become Black Boxes.

When a Feature module becomes a black box, your whole state, action, and reducer concepts become internal implementation details that are not exposed to the consumers of your features. Your public interface becomes very small and easy to test and verify.

This small surface area is easier to maintain and helps incremental builds because the public surface changes very rarely, and you could even swap out the actual implementation of a feature with a fake.
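As an illustration of swapping in a fake, here is a small plain-Swift sketch. Every name in it (`SearchFeature`, `LiveSearchFeature`, `FakeSearchFeature`, `firstResults`) is hypothetical and chosen only for this example; the live implementation is a trivial stand-in for whatever the real feature does internally, TCA or otherwise.

```swift
enum SearchInput { case query(String) }
enum SearchOutput: Equatable { case results([String]) }

// The entire public surface of the feature.
protocol SearchFeature: AnyObject {
    var onOutput: ((SearchOutput) -> Void)? { get set }
    func send(_ input: SearchInput)
}

// Stand-in for the real implementation (which could use TCA internally).
final class LiveSearchFeature: SearchFeature {
    var onOutput: ((SearchOutput) -> Void)?
    private let corpus = ["apple", "apricot", "banana"]

    func send(_ input: SearchInput) {
        switch input {
        case .query(let text):
            onOutput?(.results(corpus.filter { $0.hasPrefix(text) }))
        }
    }
}

// Fake for tests and previews: ignores the input and returns canned output.
final class FakeSearchFeature: SearchFeature {
    var onOutput: ((SearchOutput) -> Void)?
    var canned = ["stub"]

    func send(_ input: SearchInput) {
        onOutput?(.results(canned))
    }
}

// App code depends on the protocol only, so either implementation fits.
func firstResults<F: SearchFeature>(from feature: F, query: String) -> [String] {
    var captured: [String] = []
    feature.onOutput = { output in
        if case .results(let items) = output { captured = items }
    }
    feature.send(.query(query))
    return captured
}

print(firstResults(from: LiveSearchFeature(), query: "ap")) // ["apple", "apricot"]
print(firstResults(from: FakeSearchFeature(), query: "ap")) // ["stub"]
```

Because `firstResults` never names a concrete type, replacing the live feature with the fake requires no change on the application side.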

Risk Reduction

If you want to lower the business risk for your application, having clear boundaries across your features means they can use any architecture pattern. Furthermore, the architecture pattern could change over time: maybe you start with TCA but decide you want to use a different pattern just in this particular Feature case. With a minimal public interface, you could replace the whole feature implementation without touching any other part of the main application.

And if at any time in the future you decide you want to migrate off TCA for whatever reason, you could do it incrementally with much more ease than with a vanilla single-store approach.

Conclusion

A Unidirectional Data Flow architecture that doesn’t provide a dependency diffing tree will eventually cause performance issues as your project grows since the cost of actions going through the system will constantly increase.

If you decide to use a multi-store architecture for your project, it will force your engineers to think more about data modeling, because the boundaries are much firmer when features are totally separated. It also means that if you ever abandon TCA as the leading architecture pattern of your application, features could still use it internally, be integrated into another architecture pattern, and be migrated incrementally (if at all). This limits the business risk of committing to a single framework or architecture pattern.